THE LAB 32: hRequests vs anti-bots: a full benchmark


How does it perform against Cloudflare, Akamai, Datadome, PerimeterX and Kasada?

In a previous article, I wrote about hRequests (human requests), a Python package that extends traditional HTTP requests with extra features such as headless browsing, real browser TLS fingerprints, and more.

We already tested hRequests against Akamai, but today I want to dig deeper and see whether it can bypass the other most widely used anti-bot solutions: Cloudflare, Datadome, PerimeterX, and Kasada.


hrequests banner on GitHub

For this test, we’ll scrape the usual websites from our Hands-On article series, so we keep the same baseline. As a reminder, the fact that hRequests passed our tests on these websites doesn’t mean it will pass on every website protected by the same anti-bot solutions. These products are highly customizable, so two websites running the same anti-bot can respond in different ways.

Also consider that I’m running these tests from my laptop at the office, so I’m using a clean IP and the device fingerprint sent by the browser is legitimate.

Different setups could lead to different results but, with this article, I hope you discover a new tool for your toolbelt that, with a proper setup, could help in your web scraping projects.

Given these premises, let’s see how hRequests performs against the most famous anti-bot solutions. The full code is available in the GitHub repository reserved for paying users.

Akamai ✅

We’ve already covered this case in a post dedicated to Akamai and hRequests, but let’s recap what we did.

We are testing against the website luisaviaroma.com, which has Akamai + reCAPTCHA.


Our plan to bypass this combo is:

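- Load the homepage to grab the initial Akamai cookie, using a headful Chromium window to get past the reCAPTCHA.

```python
import hrequests

# Headful session: the browser window is visible so the reCAPTCHA can be handled
session = hrequests.BrowserSession(headless=False)
url = 'https://www.luisaviaroma.com/'
akamai_test = session.get(url)
# Render the response in the browser, emulating human-like behaviour
page = akamai_test.render(mock_human=True)
```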

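- Once the cookies are stored in the session, we can reuse them to browse the product pages headlessly.

```python
import json

# language, country, category, current_page and total_pages are defined
# earlier in the full script (not shown in the article)
while current_page < total_pages:
    try:
        url = ('https://www.luisaviaroma.com/' + language + '-' + country + '/shop/'
               + category + '&Page=' + str(current_page) + '&ajax=true')
        # Same session as before, so the Akamai cookies are sent along
        akamai_test = session.get(url)
        json_data = json.loads(akamai_test.text)
        products = json_data["Items"]
        # ... parse `products` here ...
    except (json.JSONDecodeError, KeyError) as error:
        # A non-JSON or unexpected reply may mean the request was blocked
        print(f'Page {current_page} failed: {error}')
    current_page += 1
```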

Easy and effective.
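The pagination above boils down to two small steps: build one URL per page and pull the `Items` array out of the JSON reply. Here is a minimal sketch of those steps as plain functions, so they can be tested without a browser. The function names are hypothetical; the URL pattern mirrors the one in the loop, and `category` is assumed to already carry its own query string, as it does there.

```python
import json


def listing_url(language: str, country: str, category: str, page: int) -> str:
    """Build the paginated AJAX listing URL with the same pattern as the loop above."""
    # category is assumed to already contain its own query string, as in the original code
    return (
        f"https://www.luisaviaroma.com/{language}-{country}/shop/{category}"
        f"&Page={page}&ajax=true"
    )


def extract_items(body: str) -> list:
    """Return the list under "Items", or an empty list if the body is not the expected JSON."""
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        # e.g. an HTML block page returned instead of JSON
        return []
    if not isinstance(data, dict):
        return []
    items = data.get("Items", [])
    return items if isinstance(items, list) else []
```

Inside the loop, this would read as `products = extract_items(session.get(listing_url(language, country, category, current_page)).text)`. An empty list is then a useful signal to stop paging or to check whether the session has been flagged.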

Cloudflare ✅

Let’s check if we can use the same approach with Cloudflare, testing the website harrods.com.

Unfortunately, the same approach doesn’t work here: we hit the wall as soon as we load the home page.
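When the default profile is blocked, one option is to try each browser and mocked operating system in turn until one gets through. Below is a minimal sketch of that trial-and-error search. It assumes the candidate values `firefox`/`chrome` and `win`/`mac`/`lin`, plus a hypothetical `attempt` callable that you would implement with hrequests and that returns True when the page loads without a challenge. The loop itself is plain Python and is not part of the hrequests API.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple

Profile = Tuple[str, str]  # (browser, os)


def first_working_profile(
    browsers: Iterable[str],
    oses: Iterable[str],
    attempt: Callable[[str, str], bool],
) -> Optional[Profile]:
    """Try every (browser, os) pair in order and return the first one that passes."""
    for browser, os_name in product(browsers, oses):
        try:
            if attempt(browser, os_name):
                return browser, os_name
        except Exception as exc:  # a crash with one profile should not stop the search
            print(f"{browser}/{os_name} failed: {exc}")
    return None
```

In this test, the combination that got through was Firefox mocking macOS, which is what `hrequests.firefox.Session(os='mac')` sets up.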

After playing around with the browsers supported by the package and the operating systems it can mock, I modified the initial steps as follows:

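```python
import time
from random import randrange

import hrequests

# Firefox TLS fingerprint, mocking a macOS device
session = hrequests.firefox.Session(os='mac')
url = 'https://www.harrods.com/'
cloudflare_test = session.get(url)
# Open the response in a visible (headful) browser window
page = cloudflare_test.render(mock_human=True, headless=False)
# print(page.content)

url = 'https://www.harrods.com/en-it/shopping/women-clothing?icid=megamenu_shop_women_clothing_all-clothing&pageindex=1'
check = 0
while check == 0:
    page.goto(url)
    # Random pause so the page can finish rendering before it is parsed
    interval = randrange(20, 30)
    time.sleep(interval)
    # ... parse page.content and set check = 1 when done (not shown) ...
```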

This way, we open a Firefox browser window and load a product category page in it.

It seems we’re bypassing both the Cloudflare Turnstile check and the hard block Cloudflare usually returns on this website.

The only addition needed was a random sleep between pages: the browser is sometimes slow to render them, and without the pause we could start parsing the HTML before the page has fully rendered.
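A fixed random sleep works, but it wastes time when the page is ready early and can still be too short on a slow render. One alternative, sketched here as an assumption rather than what the original script does, is to poll for a sign that the page has fully rendered, with jittered pauses and an overall timeout. `wait_until` is a hypothetical helper. The `check` callable would wrap something like a test on `page.content` for an element you expect on the listing.

```python
import random
import time
from typing import Callable


def wait_until(check: Callable[[], bool], timeout: float = 30.0,
               min_poll: float = 1.0, max_poll: float = 3.0) -> bool:
    """Poll check() at jittered intervals until it returns True or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if check():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        # Random pause between polls, capped so we never sleep past the deadline
        time.sleep(min(random.uniform(min_poll, max_poll), remaining))
```

The random pause keeps some of the irregular timing of the original `randrange(20, 30)` sleep, while the page is parsed as soon as the check passes.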

Datadome ❌

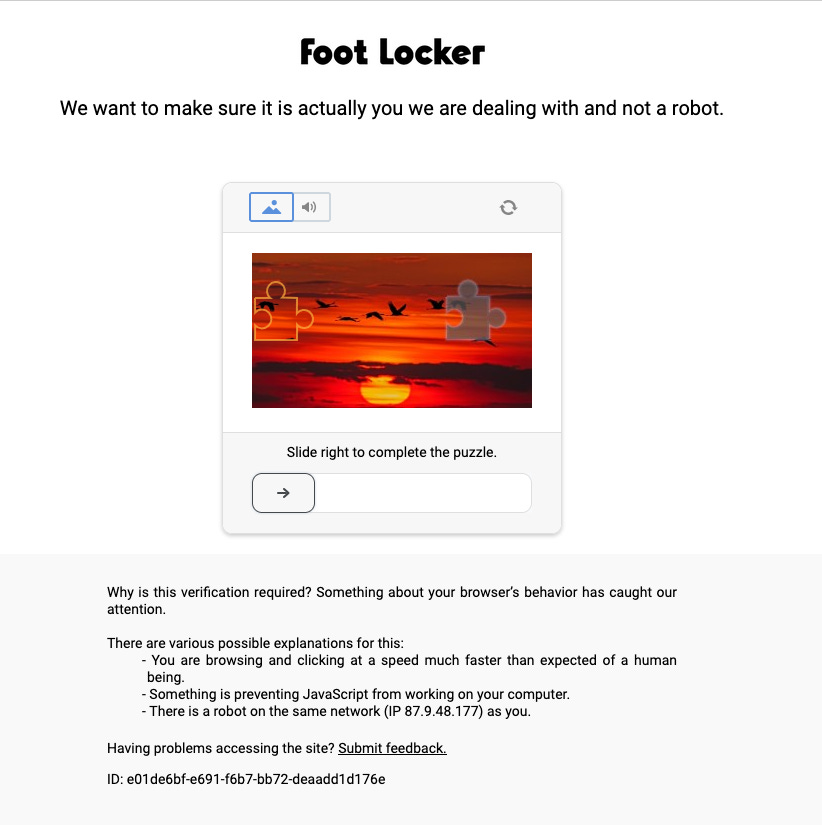

For this test, we’ll use the usual footlocker.it website, protected by both Datadome and reCAPTCHA.


Unfortunately, in this case the approach used so far seems to work only once: after that it triggers some red flag in the anti-bot, and on the following attempts a CAPTCHA must be solved to proceed.


I also added a residential proxy to get past the block but, as expected, a more human-like scraper is needed to bypass it.

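```python
import time
from random import randrange

import hrequests

# Firefox on a mocked macOS, routed through a residential proxy (placeholder value)
session = hrequests.BrowserSession(os='mac', browser='firefox', proxy_ip='YOURPROXYPROVIDER')
url = 'https://www.footlocker.it/'
datadome_test = session.get(url)
page = datadome_test.render(mock_human=True, headless=False)
interval = randrange(20, 30)
time.sleep(interval)
# print(page.content)

url = 'https://www.footlocker.it/it/category/uomo/scarpe/sneakers.html'
check = 0
while check == 0:
    page.goto(url)
    interval = randrange(20, 30)
    time.sleep(interval)
    # ... parse page.content and set check = 1 when done (not shown) ...
```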
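The Datadome loop runs until `check` changes, and on this site the most useful reason to stop it is noticing that a CAPTCHA has replaced the real content. Below is a minimal sketch based on simple substring markers. The marker list is an assumption: `captcha-delivery.com` is the host commonly seen in DataDome challenges, `px-captcha` the container used by PerimeterX's "Press and Hold", and `g-recaptcha` the reCAPTCHA widget class. Verify all of them against the pages you actually receive.

```python
# Substrings that suggest a challenge page instead of real content.
# ASSUMPTION: illustrative list, to be checked against real block pages.
CHALLENGE_MARKERS = (
    "captcha-delivery.com",  # host commonly used by DataDome challenges
    "px-captcha",            # PerimeterX "Press and Hold" container
    "g-recaptcha",           # reCAPTCHA widget class
)


def is_challenge(html: str, markers=CHALLENGE_MARKERS) -> bool:
    """Return True if any known challenge marker appears in the HTML (case-insensitive)."""
    lowered = html.lower()
    return any(marker.lower() in lowered for marker in markers)
```

In the loop, a check such as `if is_challenge(page.content): break` would stop the scraper at the first CAPTCHA, instead of sending more requests from a session that has already been flagged.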

PerimeterX ✅

With PerimeterX I wanted to raise the bar a little and see whether I could read not only the website’s HTML but also its underlying APIs.

So we started as we did for Datadome, targeting the neimanmarcus.com website, but instead of looping over a product category page, we iterate over the API pages of a product subcategory.

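```python
import time
from random import randrange

import hrequests


def parse_main(self, response):
    # Firefox on a mocked macOS, routed through a proxy (placeholder value)
    session = hrequests.BrowserSession(os='mac', browser='firefox', proxy_ip='YOURPROXY')
    url = 'https://www.neimanmarcus.com/'
    perimeterx_test = session.get(url)
    page = perimeterx_test.render(mock_human=True, headless=False)
    # print(page.content)

    # Load a category page before calling the API
    url = 'https://www.neimanmarcus.com/c/womens-clothing-cat58290731?navpath=cat000000_cat000001_cat58290731'
    page.goto(url)
    interval = randrange(20, 30)
    time.sleep(interval)

    # Product listing API for a subcategory, first page
    url = 'https://www.neimanmarcus.com/c/dt/api/productlisting?categoryId=cat84980747&page=1'
    check = 0
    while check == 0:
        page.goto(url)
        interval = randrange(20, 30)
        time.sleep(interval)
        # ... read page.content, move to the next page, set check = 1 when done (not shown) ...
```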

The scraper worked, even though on some runs it got stuck on the “Press and Hold” CAPTCHA typical of PerimeterX.
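Walking the API instead of the HTML means changing only the `page` query parameter of the `productlisting` endpoint used in the PerimeterX test. Here is a small sketch that generates those URLs with the standard library. The function name and the page range are assumptions; the `categoryId` value is the one from the snippet.

```python
from typing import Iterator
from urllib.parse import urlencode

API_BASE = "https://www.neimanmarcus.com/c/dt/api/productlisting"


def listing_api_urls(category_id: str, first_page: int, last_page: int) -> Iterator[str]:
    """Yield one productlisting API URL per page, from first_page to last_page inclusive."""
    for page_number in range(first_page, last_page + 1):
        query = urlencode({"categoryId": category_id, "page": page_number})
        yield f"{API_BASE}?{query}"
```

For example, `listing_api_urls("cat84980747", 1, 3)` yields the URLs for pages 1 to 3. Each one would replace the hard-coded URL passed to `page.goto()` inside the `while` loop.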

Kasada ❌

The final test for today is with Kasada, on the target website canadagoose.com.


We used the same approach as before, but we could not even load the homepage: the anti-bot returned a blank screen, which means we didn’t pass Kasada’s challenge.
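Here the failure shows up as a blank screen rather than an explicit CAPTCHA, so a substring check does not help. One way to detect it programmatically, sketched as an assumption, is to measure how much visible text the rendered HTML contains, ignoring `<script>` and `<style>` content. The 50-character threshold and the helper names are arbitrary choices for illustration.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collect text that sits outside <script> and <style> elements."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def looks_blank(html: str, min_chars: int = 50) -> bool:
    """True when the page has fewer than min_chars characters of visible text."""
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    return sum(len(chunk) for chunk in parser.chunks) < min_chars
```

A challenge page that consists mostly of scripts counts as blank, while a normal product page easily passes the threshold.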

I tried tweaking the few options the library offers, including disabling the “mock_human” parameter when rendering the page, but nothing changed.

Test failed.

Final remarks about hrequests

I only discovered this package recently and it’s been a nice surprise. It can be seen as a mix of python-requests with a taste of Playwright, and in some cases it’s well worth using.

For example, I’m personally using it in production for Akamai. Switching between headful and request modes while keeping the same session context is a killer feature.
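hRequests handles this handoff for you. To show what it amounts to, here is a minimal sketch (not hRequests’ own code) that turns browser cookies into a `Cookie` header for a plain HTTP client. It assumes the cookies are dictionaries in the shape Playwright’s `BrowserContext.cookies()` returns (`name`, `value`, `domain`, `path`, and so on). Domain matching is simplified, and path, expiry and secure flags are ignored.

```python
from typing import Dict, List


def cookie_header(cookies: List[Dict], host: str) -> str:
    """Build a Cookie header value from browser-exported cookies that apply to host.

    Simplified matching: a cookie applies if its domain (without a leading dot)
    equals host or is a parent domain of it. Path, expiry and secure flags are ignored.
    """
    pairs = []
    for cookie in cookies:
        domain = cookie["domain"].lstrip(".")
        if host == domain or host.endswith("." + domain):
            pairs.append(f"{cookie['name']}={cookie['value']}")
    return "; ".join(pairs)
```

Doing this by hand also means keeping the TLS fingerprint and headers consistent with the browser that earned the cookies. That is exactly the part hRequests takes care of when it switches modes within the same session.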

On the other hand, few options are exposed for the Playwright mode, which makes the package ineffective against more complex anti-bots like Kasada, which require a more fine-grained headful environment.

As always, there’s no silver bullet in web scraping but a toolbelt where we can add our tools to choose from case to case.