THE LAB #31: Scraping location data using a world grid

Excerpt

Building a fundamental tool for scraping location data in a cost-effective way

One of the most popular dataset categories created with web scraping is location data: it may be reviews of places, accommodation prices and occupancy, store locators, and so on. However, extracting this data, particularly when coordinates are required as inputs for website APIs, presents a peculiar set of challenges.

Let’s analyze two of the most common cases we can encounter: radius-based and grid-based APIs.

Store locators - Radius-based API

One of the most common location datasets comes from store locators. Brands and investors use it to understand how retail operations are going: a growing number of stores can be a good signal for the health of a retailer, while a shrinking one can be a symptom of major trouble in the company.

Since people need to be able to find these retail locations, almost every brand has a store locator on its website, and they all look pretty much the same.

There’s a map, a list of stores, and typically an internal API that draws dots on the map.

This case is no exception. The stores on the map are retrieved by this API call:

https://www.alexandermcqueen.com/on/demandware.store/Sites-AMQ-WEUR-Site/it_IT/Stores-FindStoresData?countryCode=US

where the parameter countryCode filters the result by country.

That’s the easiest way to scrape data from a store locator: just call the API once for every country in the world and you’ll get all the locations you need.
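A minimal sketch of that loop, assuming the endpoint accepts any ISO 3166-1 alpha-2 code in countryCode (only US appears in the call above). The helper name and the short country list are hypothetical. It only builds the URLs, so the choice of HTTP client stays open.

```python
from urllib.parse import urlencode

# Endpoint taken from the example call above.
BASE_URL = (
    "https://www.alexandermcqueen.com/on/demandware.store/"
    "Sites-AMQ-WEUR-Site/it_IT/Stores-FindStoresData"
)


def store_locator_urls(country_codes):
    """Yield one store locator URL per country code (hypothetical helper)."""
    for code in country_codes:
        yield f"{BASE_URL}?{urlencode({'countryCode': code})}"


# Illustrative list only; a full run would iterate over every country code.
for url in store_locator_urls(["US", "IT", "FR"]):
    print(url)
```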

Another similar case is when we encounter websites like the following:

https://boutique.dolcegabbana.com/index.html?q=34.29208802950000%2C129.85823039930000&qp=34.29208802950000,129.85823039930000&r=65&l=en

In this case, the store discovery happens using coordinates and a radius. But with this method, how can we be sure of scraping all the locations?

Airbnb - Grid-based APIs

In my life as a “data provider”, I’ve found myself in this situation many times. In the previous example, the radius used by the API is 65 km but, depending on the website, it could be much smaller.

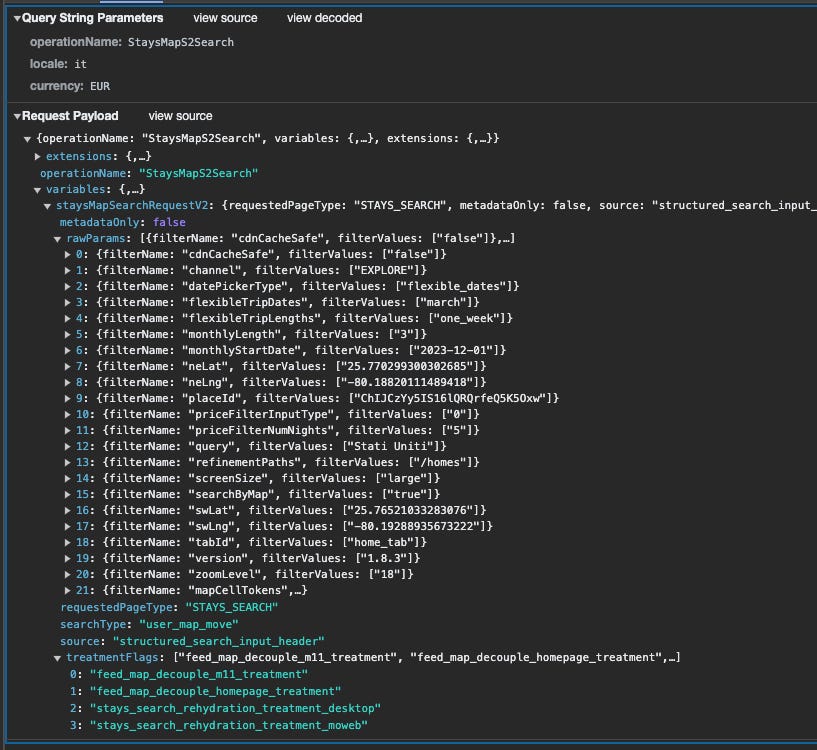

Take Airbnb as an example: you can scroll the map on the website to find accommodations, but when you zoom in, other locations that were previously hidden pop up.

To populate this portion of Florida, the Airbnb website made a POST request to its internal API. Among the various filters about the stay, we can spot the zoom level, which is analogous to the radius we have seen before, and the coordinates of a north-east and a south-west point: the opposite corners of the rectangle that contains the results shown.

In fact, when zooming in, the zoom level parameter increases and the two corner points get closer to each other.

The need for a (smart) grid of the world

The last two examples show how location data is generally retrieved when it is displayed on a map. We can have a point and get all the places inside an imaginary circle with a given radius. Or we can have a polygon, typically a square or a rectangle, that contains the locations, and we need to pass the coordinates of at least two of its corners.

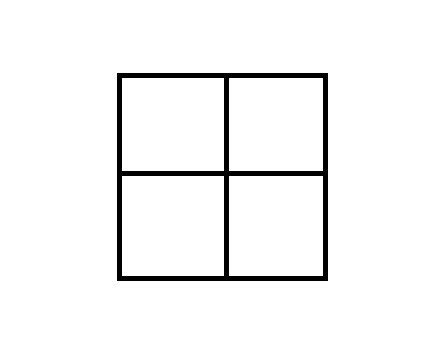

To solve both cases, we should split the world into sectors, ideally squares, small enough for any case: we can use the center of each square as the center of a circle with a given radius (first case), and its corners for APIs like the one in the second case.
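To make the idea concrete, here is a small sketch of how one grid cell can feed both kinds of API. The Square class and its field names are hypothetical. The covering_radius helper treats the cell as a flat square, which is only an approximation on a sphere: a circle whose radius is half the side leaves the corners uncovered, so half the diagonal is used instead.

```python
import math
from dataclasses import dataclass


@dataclass
class Square:
    """A grid cell described by its south-west and north-east corners (degrees)."""
    sw_lat: float
    sw_lon: float
    ne_lat: float
    ne_lon: float

    def center(self):
        """Midpoint of the cell, usable as the center for radius-based APIs."""
        return ((self.sw_lat + self.ne_lat) / 2, (self.sw_lon + self.ne_lon) / 2)

    def bounding_box(self):
        """The two opposite corners that box-based APIs usually expect."""
        return {"ne": (self.ne_lat, self.ne_lon), "sw": (self.sw_lat, self.sw_lon)}


def covering_radius(side_m):
    """Radius of the circle passing through the corners of a flat square."""
    return side_m * math.sqrt(2) / 2


cell = Square(sw_lat=25.0, sw_lon=-81.0, ne_lat=26.0, ne_lon=-80.0)
print(cell.center())                    # (25.5, -80.5)
print(cell.bounding_box())
print(round(covering_radius(100_000)))  # 70711 meters for a 100 km side
```

Circles that cover their whole square overlap with the neighboring ones, so results from a radius-based API would need deduplication.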

But how big should these squares be, and how do we create them?

It depends on the case, but we can create some tools to help us out without reinventing the wheel each time. You will find the code for these tools in The Web Scraping Club GitHub repository reserved for our paying readers. If you’re one of them and don’t have access to it, please write to me at pier@thewebscraping.club

Creating the world grid

The first tool we need for our location data scraping project is a world grid. It seems a trivial task, but building one that is reasonably accurate at any latitude is not that easy.

The formula I’ve found online, which works reasonably well at any latitude, is the following:

#EARTH RADIUS IN METERS

R=6378137

#OFFSET (radius is our square size)

off_lat = radius/R

off_lon = radius/(R*math.cos(math.pi*nw_lat/180))

#ADD RADIUS TO LAT AND LON

next_lat=current_lat + off_lat * 180/math.pi

next_lon=current_lon + off_lon * 180/math.pi

Given the Earth’s radius, we can move a pair of coordinates by X meters: the offsets computed this way are angles in radians, so we multiply them by 180/π to convert them to degrees before adding them to the current latitude and longitude.
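As a worked example, the formula can be wrapped in a small function. This is a sketch, not the code from the repository. The function name is hypothetical, and it uses the latitude of the point being moved inside the cosine (the snippet above uses nw_lat), which is an assumption about the intent. It should not be called exactly at the poles, where the cosine goes to zero.

```python
import math

EARTH_RADIUS_M = 6378137  # same value as R in the formula above


def offset_point(lat, lon, north_m, east_m):
    """Shift (lat, lon), in degrees, by north_m and east_m meters."""
    d_lat = north_m / EARTH_RADIUS_M
    # Meridians converge toward the poles, hence the cosine of the latitude.
    d_lon = east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat)))
    # Offsets are in radians: convert to degrees before adding them.
    return lat + math.degrees(d_lat), lon + math.degrees(d_lon)


# 100 km at the equator is roughly 0.898 degrees in both directions.
print(offset_point(0.0, 0.0, 100_000, 100_000))
# At 60 degrees of latitude the same 100 km spans about twice the longitude.
print(offset_point(60.0, 0.0, 100_000, 100_000))
```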

Given that, starting from the southwest of the globe (latitude -90, longitude -180), we can easily create a grid of squares with a 100-kilometer side using the program create_grid_world.py in the repository.

The initial parameters set the maximum extent of the grid. To make a grid that covers the whole world, we need to set the initial values as follows:

#WE ARE CREATING HERE THE GRID FOR THE WHOLE WORLD, SQUARES OF 100KM PER SIDE

country_code = 'WORLD'

parent_square = -1

#EXTERNAL BOUNDARIES OF THE GRID, THESE ARE THE VALUES FOR THE WHOLE WORLD

nw_lat = 90

nw_lon= -180

se_lat = -90

se_lon= 180

#SIZE OF THE SQUARES IN METERS

radius= 100000

last_id = 0

#EARTH RADIUS IN METERS

R=6378137

The output of this execution is more than ten thousand squares, each with a side of 100 kilometers.
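For readers without access to the repository, here is a rough sketch of how such a grid could be generated. It is not create_grid_world.py itself. The function name and the cell fields are hypothetical, the last row and column are clipped to the boundaries, and each row uses its middle latitude in the cosine so that the division never hits zero at the poles.

```python
import math

EARTH_RADIUS_M = 6378137


def build_grid(nw_lat, nw_lon, se_lat, se_lon, side_m):
    """Return cells covering the box, walking south to north and west to east."""
    d_lat = math.degrees(side_m / EARTH_RADIUS_M)
    cells = []
    lat = se_lat
    while lat < nw_lat:
        top = min(lat + d_lat, nw_lat)
        # The longitude step depends on the latitude of the current row.
        mid = math.radians((lat + top) / 2)
        d_lon = math.degrees(side_m / (EARTH_RADIUS_M * math.cos(mid)))
        lon = nw_lon
        while lon < se_lon:
            right = min(lon + d_lon, se_lon)
            # Sequential ID, so that cells can be selected and split later.
            cells.append({"id": len(cells), "sw": (lat, lon), "ne": (top, right)})
            lon = right
        lat = top
    return cells


# A small box over southern Florida, with cells of roughly 50 km per side.
for cell in build_grid(26.0, -81.0, 25.0, -80.0, 50_000):
    print(cell)
```

Calling build_grid(90, -180, -90, 180, 100_000) applies the same walk to the whole world.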

That’s a good starting point, but we’re far from having something useful in our hands.

Making the grid smarter

As we all know, about two-thirds of the Earth’s surface is covered by water, and in the water we certainly won’t find any store or restaurant with a review.

So it’s plausible that, once we also consider deserts and other unpopulated places, at least half of the squares we’ve just calculated are of no interest for our purposes and could be deleted from the list.

In fact, it’s in our interest to have as few squares as possible at this step: if we want to create smaller squares, their number quadruples every time we halve the side.

Given the original square with a side of 100 km, if we want more detail or we have an API that only works with a side of 50 km, we should split it into four.

If we do this to the full results of the previous step, we’ll have 50,000+ squares with a 50 km side, 200,000 squares with a 25 km side, and so on. Consider that in the starting example of Airbnb, the radius at which we got fewer than 1,000 items was around 100 meters: you can imagine what a waste of time and money it would be to go into such detail while keeping the original grid intact.
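The growth is easy to quantify with a few lines of arithmetic. The helper names below are hypothetical; the only assumption is that every halving of the side turns each square into exactly four.

```python
import math


def halvings_needed(start_side_m, target_side_m):
    """How many times the side must be halved to reach the target or smaller."""
    return math.ceil(math.log2(start_side_m / target_side_m))


def squares_after(start_count, halvings):
    """Each halving splits every square into four."""
    return start_count * 4 ** halvings


steps = halvings_needed(100_000, 100)  # from a 100 km side to about 100 m
print(steps)                           # 10
print(squares_after(1, steps))         # 1048576 squares from a single 100 km one
```

A single 100 km square kept by mistake becomes about a million cells at that level of detail, which is why pruning early pays off.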

To reduce the grid size we have two options:

- reduce the scope
- delete the desert squares

The first one is easy: instead of initializing the program with the coordinates of a rectangle containing the whole world, we could pass the ones containing a single state, a city, or whatever our real scope is.

Even when our scope is only a portion of the world, we can still apply the second option and delete the desert squares.

One way to do so is to use the Google Places API to tell good squares from desert ones. Alternatively, we could download data from OpenStreetMap or other providers and apply the same logic in bulk.

Let’s say we want to get all the squares where there’s at least one restaurant inside, we can use the Google Nearby search API, filtered for restaurants and with a radius equal to

Here’s the API call from the documentation:

curl -X POST -d '{

"includedTypes": ["restaurant"],

"maxResultCount": 10,

"locationRestriction": {

"circle": {

"center": {

"latitude": 37.7937,

"longitude": -122.3965},

"radius": 500.0

}

}

}' \

-H 'Content-Type: application/json' -H "X-Goog-Api-Key: API_KEY" \

-H "X-Goog-FieldMask: places.displayName" \

https://places.googleapis.com/v1/places:searchNearby

If we get at least one result, the square we’re analyzing is valuable; otherwise, we can consider it a desert for our purposes and discard it when we create smaller squares.

As you might have noticed, the output of the create_grid_world.py program also includes a unique ID assigned to each square. This lets us build a list of “approved” squares, identified by their IDs, that we can then split into four.
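The same check can be sketched in Python using only the standard library. The function names are hypothetical, and the code assumes the parsed response carries a top-level "places" list that is missing or empty when nothing is found. The request is built but not sent. maxResultCount is lowered to 1 because a single hit is enough to keep a square, and the radius is subject to the limits stated in the API documentation.

```python
import json
import urllib.request

PLACES_URL = "https://places.googleapis.com/v1/places:searchNearby"


def nearby_request(lat, lon, radius_m, api_key, place_type="restaurant"):
    """Build (but do not send) the Nearby Search request for one square center."""
    payload = {
        "includedTypes": [place_type],
        "maxResultCount": 1,  # one hit is enough to keep the square
        "locationRestriction": {
            "circle": {
                "center": {"latitude": lat, "longitude": lon},
                "radius": radius_m,
            }
        },
    }
    headers = {
        "Content-Type": "application/json",
        "X-Goog-Api-Key": api_key,
        "X-Goog-FieldMask": "places.displayName",
    }
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(PLACES_URL, data=body, headers=headers, method="POST")


def is_populated(response_body):
    """True when the parsed JSON response lists at least one place."""
    return len(response_body.get("places", [])) > 0


req = nearby_request(37.7937, -122.3965, 500.0, "API_KEY")
# To actually call the API (needs a valid key):
# with urllib.request.urlopen(req) as resp:
#     keep_square = is_populated(json.load(resp))
```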

Shrinking the grid

Let’s suppose that, one way or another, we have selected some good squares for our scope and now we want to split them into squares with a 50-kilometer side.

Let’s rework the original create_grid_world.py program and create its recursive version, create_grid_recursive.py.

In this script, we basically pass as input a list of selected squares instead of the whole world, and for each of them we create the list of squares it contains, each with half its side.

We should expect four squares in output for each square in input but, due to rounding, we sometimes get six of them.

Depending on how far we need to drill down for our web scraping project, we can repeat the square selection with the Google Places API and run this script again to get smaller and smaller squares.
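If exactly four children per square matter for your project, one alternative (not what create_grid_recursive.py does) is to cut each square at its midpoint in degrees instead of stepping in meters. The sketch below assumes cells are dictionaries with hypothetical "id", "sw" and "ne" fields. The trade-off is that the children are not exactly half the side in meters, since the width of a degree of longitude changes with latitude.

```python
def split_in_four(square):
    """Split a cell into four children by cutting at its midpoint in degrees."""
    (s, w), (n, e) = square["sw"], square["ne"]
    mid_lat, mid_lon = (s + n) / 2, (w + e) / 2
    quadrants = [
        ((s, w), (mid_lat, mid_lon)),  # south-west child
        ((s, mid_lon), (mid_lat, e)),  # south-east child
        ((mid_lat, w), (n, mid_lon)),  # north-west child
        ((mid_lat, mid_lon), (n, e)),  # north-east child
    ]
    # Keep the parent ID so every child can be traced back to its square.
    return [{"parent": square["id"], "sw": sw, "ne": ne} for sw, ne in quadrants]


parent = {"id": 7, "sw": (25.0, -81.0), "ne": (26.0, -80.0)}
for child in split_in_four(parent):
    print(child)
```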

Once we reach the desired square size, we’ll have a grid of squares covering the world (or the area of the world we’re interested in), with only populated squares, and we can use it in our web scraping project. If the website has an internal API that works with a radius, like the store locator example at the beginning, we can use the square center to pass the coordinates. If it uses a box instead, we have all the corners of the grid and we can select the ones we need.

The great thing about this process is that it’s completely reusable across different location scraping projects. Once we have created smart grids for different square sizes, we can pick the one needed for each project without reinventing the wheel again.

For any questions and feedback, as always I’m available on the Discord server and via mail at pier@thewebscraping.club