When starting a web scraping project, one of the first things to check is whether the website we want to get data from has an internal API.

If it does, that’s the preferred choice for extracting data: it’s lighter on both the server and the scraper side, since you’re requesting less data. It’s also cheaper if you’re using proxies billed per GB transmitted. Last but not least, it’s more reliable, since APIs are less prone to change than HTML code.

In some cases, you could encounter APIs that require authentication, such as Bearer tokens.

What is a Bearer token?

Let’s use the definition given on the Swagger website:

Bearer authentication (also called token authentication) is an HTTP authentication scheme that involves security tokens called bearer tokens. The name “Bearer authentication” can be understood as “give access to the bearer of this token.” The bearer token is a cryptic string, usually generated by the server in response to a login request. The client must send this token in the Authorization header when making requests to protected resources:

`Authorization: Bearer <token>`
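To make the header format concrete, here is a minimal Python sketch: one helper builds the Authorization header from a token, the other extracts the token back from a header value. The function names are illustrative and not part of any library.

```python
from __future__ import annotations


def bearer_header(token: str) -> dict[str, str]:
    """Build the Authorization header for Bearer authentication."""
    return {"Authorization": f"Bearer {token}"}


def extract_bearer(header_value: str) -> str | None:
    """Return the token from a 'Bearer <token>' header value, or None."""
    scheme, _, token = header_value.strip().partition(" ")
    # HTTP auth scheme names are case-insensitive (RFC 7235)
    if scheme.lower() != "bearer" or not token.strip():
        return None
    return token.strip()
```

For example, `bearer_header("abc123")` gives `{"Authorization": "Bearer abc123"}`, and `extract_bearer("Basic xyz")` returns None because the scheme is not Bearer.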

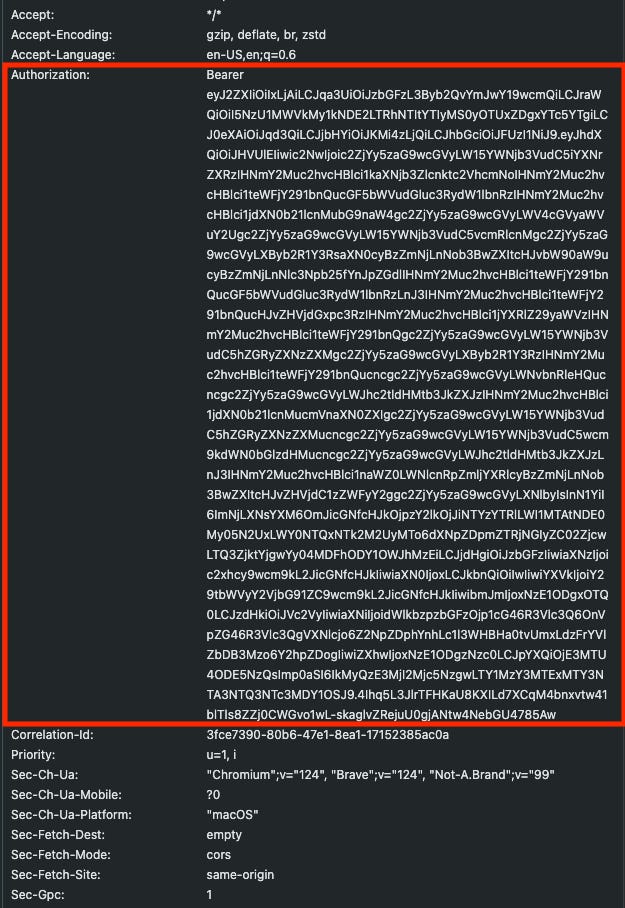

We can easily spot these API endpoints in the browser’s network inspector.


In these cases, we cannot access the API endpoint with a simple GET request (for example, by loading the URL in the browser); instead, we need to understand how the token is generated and replicate the mechanism.
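If clicking through the network tab gets tedious, the browser can export the recorded traffic as a HAR file from the network tab. A minimal sketch, assuming the standard HAR 1.2 layout (`log.entries[].request.headers`), that lists the URL paths called with a Bearer token; `bearer_endpoints` is a hypothetical helper name.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def bearer_endpoints(har: dict) -> list[str]:
    """Return the distinct URL paths whose requests carry an 'Authorization: Bearer' header."""
    seen: list[str] = []
    for entry in har.get("log", {}).get("entries", []):
        request = entry.get("request", {})
        for header in request.get("headers", []):
            name = header.get("name", "").lower()
            value = header.get("value", "")
            if name == "authorization" and value.lower().startswith("bearer "):
                path = urlsplit(request.get("url", "")).path
                if path not in seen:
                    seen.append(path)
                break  # one matching header per request is enough
    return seen
```

To use it, load the exported file with `json.load` and pass the resulting dictionary; each printed path is a candidate internal API endpoint protected by a Bearer token.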

But before starting with a real-world example, it’s important to understand that Bearer authentication is only one of the many methods you can encounter.

Differences from Castle anti-bot tokens

Castle.io is an anti-bot solution also used to protect API endpoints. In this case too, when we make a request to the endpoint, we need to pass a token in the headers, called x-castle-request-token.

Unlike Bearer tokens, where we can observe the website’s behavior and replicate it in our scraper, Castle tokens are generated using information collected from our browser, so we cannot create a new one unless we reverse-engineer the whole anti-bot solution.

The folks at Takion seem to have done it (I haven’t tried their solution yet), but this can be overkill if the data you need can be found elsewhere.

How to handle Bearer Tokens in scraping

As we just mentioned, if we encounter an API endpoint requiring a Bearer token, we don’t need to reverse-engineer anything, but we do need to carefully inspect the network tab to understand how the authentication works.

We could divide this process into three steps:

- The token request, where the website calls a first endpoint to generate a token.
- The token parsing, where the website receives the JSON containing the token and reads it.
- The final API call, where the website adds the token to the headers of the target API call and gets the data it needs to load in its page.
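The three steps can be sketched independently of any scraping framework. In this minimal sketch the HTTP layer is passed in as two functions (`post_form` and `get_json`, hypothetical names), so the logic can be tried with fake responses; in a real scraper they would wrap Scrapy, requests, or similar. The `access_token` field name is an assumption, although it is the name used in the token response shown later in this article.

```python
from typing import Callable

PostForm = Callable[[str, dict], dict]  # (url, form fields) -> parsed JSON
GetJson = Callable[[str, dict], dict]   # (url, headers) -> parsed JSON


def fetch_protected(post_form: PostForm, get_json: GetJson,
                    token_url: str, token_params: dict, api_url: str) -> dict:
    # 1. Token request: call the endpoint that issues the token
    token_response = post_form(token_url, token_params)
    # 2. Token parsing: read the token from the JSON response
    token = token_response["access_token"]
    # 3. Final API call: send the token in the Authorization header
    return get_json(api_url, {"Authorization": f"Bearer {token}"})
```

Keeping the transport outside the function makes it easy to check the flow with stub functions before pointing it at a real website.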

All these steps can be seen in the network tab of the browser’s developer tools, as the following example will show.

We’ll create a scraper that uses the internal API of an e-commerce website to scrape data efficiently by implementing the previous three steps.

As always, if you want to look at the code, you can access the GitHub repository available to paying readers. You can find this example in the folder named 51.BEARER.

If you’re one of them but don’t have access to it, please write to me at pier@thewebscraping.club to get it.

Finding the API containing the data we need

In this example, we’ll use the Loewe e-commerce website as a case study for this technique.

When browsing a product category, we can find the following call:

https://www.loewe.com/mobify/proxy/api/search/shopper-search/v1/organizations/f_ecom_bbpc_prd/product-search?siteId=LOE_USA&refine=htype%3Dset%7Cvariation_group&refine=price%3D%280.01..1370000000%29&refine=cgid%3Dwomen&refine=c_LW_custom_level%3Dwomen&currency=USD&locale=en-US&offset=32&limit=32&c_isSaUserType=false&c_countryCode=US

which returns, at least in the browser, a JSON containing a page of the products shown for that category in the US (with offset=32 and limit=32, products 33 to 64).

Even though it’s a GET call, with no payload to pass, entering this URL in the browser’s address bar returns this error:

{"title":"Unauthorized","type":"https://api.commercecloud.salesforce.com/documentation/error/v1/errors/unauthorized","detail":"Unauthorized request"}

The reason is quite simple: we need to pass the Bearer token in the headers as the website does.


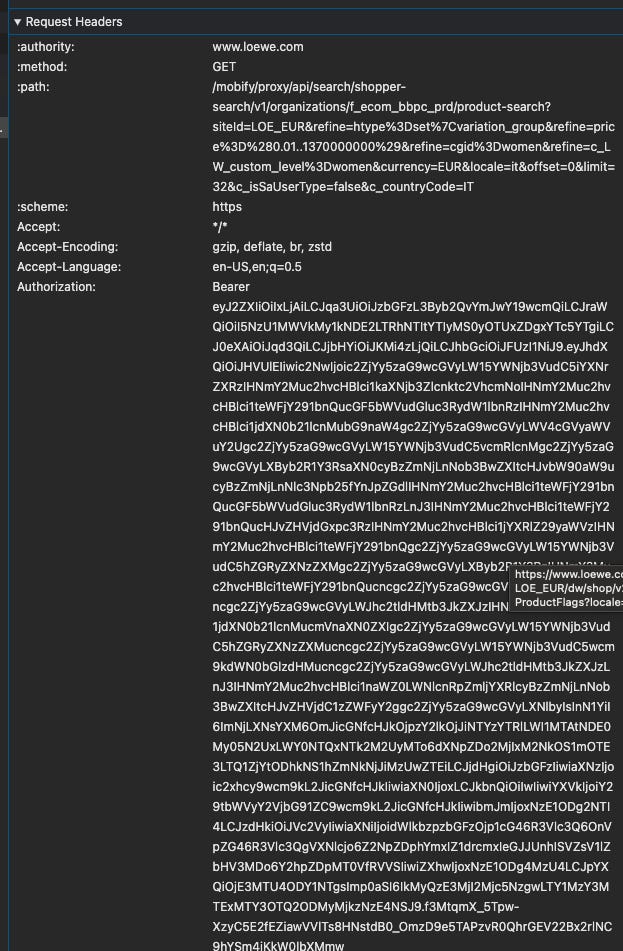

How to generate the token

The first step is to figure out how this token is generated, and the easiest way to do it is to search for the token string using the search function in the network tab (Control-F).
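The same search can be run on a HAR export of the session. A minimal sketch, assuming the HAR was saved with response bodies included (`response.content.text`); bodies stored as base64 (`content.encoding`) are not decoded here. It returns the first request whose response contains the token while its request headers do not, which is the call that issued it. `find_token_origin` is a hypothetical helper name.

```python
from __future__ import annotations


def find_token_origin(har: dict, token: str) -> tuple[str, str] | None:
    """Return (method, url) of the first entry whose response body contains
    `token` while its request headers do not, or None if there is none."""
    for entry in har.get("log", {}).get("entries", []):
        request = entry.get("request", {})
        sent_in_headers = any(token in h.get("value", "") for h in request.get("headers", []))
        body = entry.get("response", {}).get("content", {}).get("text") or ""
        if token in body and not sent_in_headers:
            return request.get("method", ""), request.get("url", "")
    return None
```

Paste in the token copied from the Authorization header of the protected call, and the function points to the endpoint worth replicating.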

We should find the first call where the token is not used in the request headers but appears in the response, as in this case.


This is the result of a POST call to the following endpoint:

https://www.loewe.com/mobify/proxy/api/shopper/auth/v1/organizations/f_ecom_bbpc_prd/oauth2/token

which needs a few parameters to work:

- client_id: a fixed string, at least when making calls from my laptop, so we’ll hardcode it
- channel_id: depends on the country version of the website we’re scraping
- grant_type: refresh_token, the action we want to perform
- refresh_token: a hashed string, and we need to figure out where it comes from.
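Put together, these fields form a standard form-encoded body. A minimal sketch with `urllib.parse.urlencode`, which also percent-encodes any special characters in the values; the `client_id` is the value hardcoded later in this article (observed in the browser, so it may change), while the helper name `refresh_token_body` is hypothetical.

```python
from urllib.parse import urlencode

CLIENT_ID = "2b563a4e-b510-4143-97e1-f45415963e21"  # observed in the browser; may change


def refresh_token_body(refresh_token: str, site_id: str) -> str:
    """Form-encoded body for the grant_type=refresh_token call."""
    return urlencode({
        "grant_type": "refresh_token",
        "refresh_token": refresh_token,
        "client_id": CLIENT_ID,
        "channel_id": site_id,
    })
```

A value such as `abc=def` is sent as `abc%3Ddef`, so characters like `=` or `+` in a token cannot break the body.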

Please note that, in the browser, this refresh call happens only when the first token expires, 30 minutes after you’ve loaded the website’s page. The same endpoint is used to generate the first token as soon as you enter the website, with the grant_type parameter set to authorization_code_pkce and some other values we don’t care about. Once we understand how the token refresh works, we can use this method as soon as we enter the website.
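Since the browser refreshes the token when it expires after 30 minutes, a scraper running longer than that would need to do the same. A minimal sketch of a token holder that refreshes shortly before expiry: `fetch_token` is a hypothetical function wrapping the refresh POST call, the 60-second safety margin is an arbitrary choice, and the clock is injectable so the logic can be tested. If the response carries a new refresh_token (the one shown later in this article does), the holder keeps the newest.

```python
import time
from typing import Callable, Optional


class TokenHolder:
    """Keeps a Bearer token and refreshes it shortly before it expires."""

    def __init__(self, fetch_token: Callable[[str], dict], refresh_token: str,
                 margin: float = 60.0, clock: Callable[[], float] = time.monotonic):
        self._fetch_token = fetch_token  # hypothetical: performs the refresh_token POST
        self._refresh_token = refresh_token
        self._margin = margin
        self._clock = clock
        self._access_token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        if self._access_token is None or self._clock() >= self._expires_at - self._margin:
            data = self._fetch_token(self._refresh_token)
            self._access_token = data["access_token"]
            # Keep the newest refresh token if the server rotates it
            self._refresh_token = data.get("refresh_token") or self._refresh_token
            self._expires_at = self._clock() + float(data.get("expires_in", 0))
        return self._access_token
```

Every API call would then ask the holder for the token instead of storing it once at startup.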


Again, the refresh_token field contains a string that we need to figure out how to get.

Just like before, we inspect the network tab to find it, and we immediately realize that it’s a sort of session ID, stored in our cookies under the key cc-nx-g as soon as we enter the website.

So the logical steps our scraper needs to take are the following:

- enter the home page and store all the cookies
- read the cookies and store the value of the cc-nx-g key in a variable
- use this string in the parameters of the refresh token API call
- read its response and store the Bearer token
- use the Bearer token to finally call the product list API

Let’s write the code step by step.

Reading cookies from the Scrapy spider

As we’ve seen before, the first thing to do is to load the home page: we’ll use an external file to pass the home page URL and some other variables we’ll need later in the scraper.

```python
from scrapy import Request  # at the top of the spider module

def start_requests(self):
    # Each row of self.LOCATIONS holds the home page URL plus the per-country parameters
    for i, row in enumerate(self.LOCATIONS):
        url, loc, country, currency, site_id, locale, country2 = row.split(',')
        cookies = {
            'dispatchSite': loc,
            # Akamai "challenge OK" cookie copied from the browser (see below)
            '_abck': '2F3B4ACD2FE4D7B46B67DF1B41C41094~0~YAAQxRRlX6Ph55mOAQAAmIIarwsuEMCTBTRDuBAE37pdgzxnSncGgGwB1MTAjZkdOGAXljdEpzahXdK1yNCRZKaZ4/K/gn9w75v1eC43iWvWVi5bv2mkxrlMx9Y3lxpTsHM+ER5yLK+MClpc7X0ch4FKyFUAl/wodJ3Rgt2mKGPIxlpfIou4xVHXfvxywtqw2piFFCSmUcOjCS6z2K0Xp/hmCohpwP0Ghr7PHN3RgcKysvq8K80UQrLAKVKzwIvceOGznqnGCMUD/9Xkr9TUj6KXslz1iyoVmlB7Iyj7q2U7AwtSgD/qDcGbBozwgIDljbVf0yJnF8OUyUfBtmkVIw1/YGN+jfAaek/STKru0uXSw/vD6b4YQtbfsR8uzxv63EAPVwO0qs7hJ9j094NTuQrBl2uncbI=~-1~-1~-1',
        }
        # A separate cookiejar per location keeps each country's session cookies apart
        yield Request(url, callback=self.get_session_cookie, headers=self.HEADER, cookies=cookies,
                      meta={'location': loc.strip(), 'cookiejar': i, 'country': country,
                            'currency': currency, 'site_id': site_id,
                            'country2': country2.strip(), 'locale': locale},
                      dont_filter=True)
```
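Each row of `self.LOCATIONS` is split positionally into seven fields. The article doesn’t show the file, so here is a minimal sketch, assuming a plain comma-separated file with one location per line in the same field order, that reads it with the `csv` module into named tuples so that later code can refer to fields by name. The `Location` type, the `read_locations` helper, and the sample row used below are hypothetical.

```python
from __future__ import annotations

import csv
import io
from typing import NamedTuple


class Location(NamedTuple):
    url: str
    loc: str
    country: str
    currency: str
    site_id: str
    locale: str
    country2: str


def read_locations(text: str) -> list[Location]:
    """Parse one location per line, stripping the spaces the spider strips by hand."""
    rows = csv.reader(io.StringIO(text))
    return [Location(*(field.strip() for field in row)) for row in rows if row]
```

With a made-up row such as `https://www.loewe.com/,us,US,USD,LOE_USA,en-US,US`, the result exposes `site_id` as `LOE_USA` and `country2` as `US`, and a row with the wrong number of fields fails loudly instead of shifting values.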

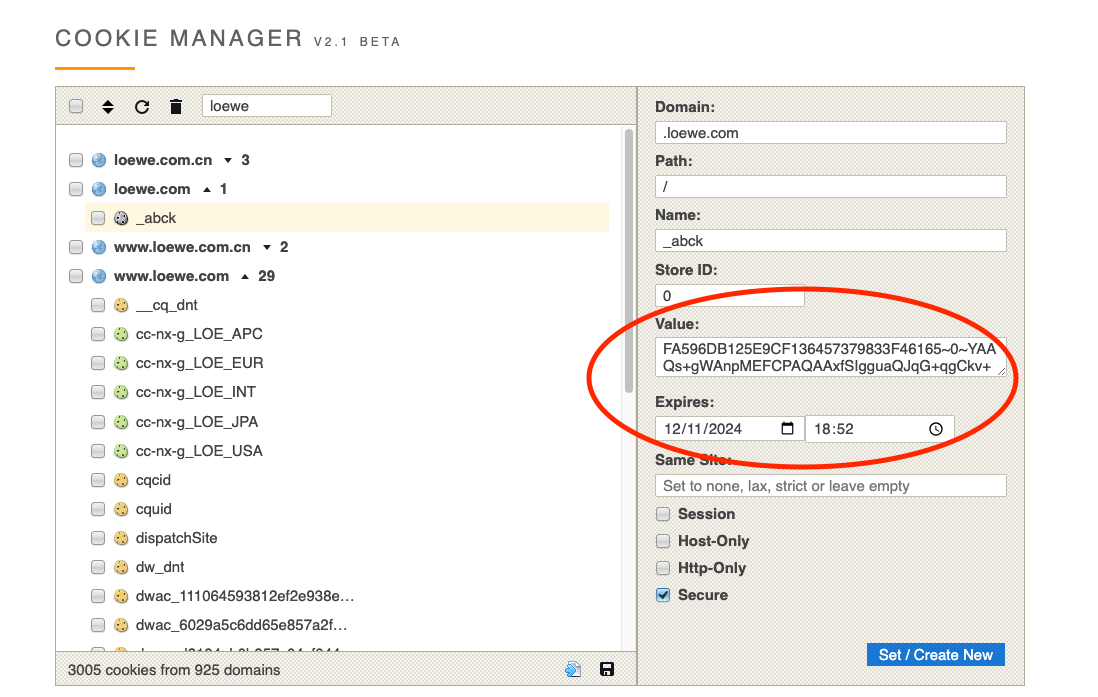

The website is protected by Akamai, but using the Cookie Manager browser extension we can see that its “challenge OK” cookie (the _abck value above) lasts six months. Since this article doesn’t focus on Akamai, we can simply pass this cookie in the scraper to get past Akamai without any issues.


Reading the cookies and refreshing the token

After we successfully load the home page of the website, we have all the initial cookies set in place. What we have to do now is to extract the cc-nx-g value and use it in the payload of the refresh token API call.

To get this value, we read all the response headers received when loading the home page and keep only the Set-Cookie ones.

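```python
def get_session_cookie(self, response):
    site_id = response.meta.get('site_id')
    # Scrapy returns every Set-Cookie header as bytes
    cookies = response.headers.getlist('Set-Cookie')
    sessionid = None
    for cookie in cookies:
        cookie = cookie.decode('latin-1')
        print(cookie)
        # The cc-nx-g cookie holds the refresh token: keep only its value,
        # splitting on the first "=" so values containing "=" survive
        if cookie.startswith('cc-nx-g='):
            sessionid = cookie.split(';')[0].split('=', 1)[1]
```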

We can get the list of cookies set by the server with the `response.headers.getlist('Set-Cookie')` call.

By iterating over the results, we find the cc-nx-g cookie and store its value in the sessionid variable.
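The lookup can also live in a small standalone helper. A minimal sketch (the name `cookie_value` is hypothetical) that decodes each raw Set-Cookie header, takes the name=value pair before any attributes, and matches the cookie name exactly, so a cookie named, say, cc-nx-g-old would not be picked up by mistake:

```python
from __future__ import annotations


def cookie_value(set_cookie_headers: list[bytes], name: str) -> str | None:
    """Return the value of cookie `name` from raw Set-Cookie headers, or None."""
    for raw in set_cookie_headers:
        # The "name=value" pair comes before any ";"-separated attributes
        pair = raw.decode("latin-1").split(";", 1)[0]
        key, sep, value = pair.partition("=")
        if sep and key.strip() == name:
            return value.strip()
    return None
```

Inside the spider it would be called as `cookie_value(response.headers.getlist('Set-Cookie'), 'cc-nx-g')`.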

Now we’re ready to refresh the token we’re looking for.

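```python
    # (continuing get_session_cookie)
    if not sessionid:
        self.logger.warning('cc-nx-g cookie not found on %s', response.url)
        return
    # Same form fields the browser sends; client_id was observed in the browser's request
    data = ('grant_type=refresh_token&refresh_token=' + sessionid
            + '&client_id=2b563a4e-b510-4143-97e1-f45415963e21&channel_id=' + site_id)
    url = 'https://www.loewe.com/mobify/proxy/api/shopper/auth/v1/organizations/f_ecom_bbpc_prd/oauth2/token'
    yield Request(url, method='POST', body=data, callback=self.read_token,
                  meta=response.meta, headers=self.TOKEN_HEADERS)
```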

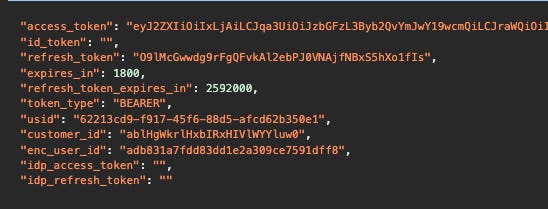

And here’s the result:

```json
{
  "access_token": "eyJ2ZXIiOiIxLjAiLCJqa3UiOiJzbGFzL3Byb2QvYmJwY19wcmQiLCJraWQiOiI5NzU1MWVkMy1kNDE2LTRhNTItYTIyMS0yOTUxZDgxYTc5YTgiLCJ0eXAiOiJqd3QiLCJjbHYiOiJKMi4zLjQiLCJhbGciOiJFUzI1NiJ9.eyJhdXQiOiJHVUlEIiwic2NwIjoic2ZjYy5zaG9wcGVyLW15YWNjb3VudC5iYXNrZXRzIHNmY2Muc2hvcHBlci1kaXNjb3Zlcnktc2VhcmNoIHNmY2Muc2hvcHBlci1teWFjY291bnQucGF5bWVudGluc3RydW1lbnRzIHNmY2Muc2hvcHBlci1jdXN0b21lcnMubG9naW4gc2ZjYy5zaG9wcGVyLWV4cGVyaWVuY2Ugc2ZjYy5zaG9wcGVyLW15YWNjb3VudC5vcmRlcnMgc2ZjYy5zaG9wcGVyLXByb2R1Y3RsaXN0cyBzZmNjLnNob3BwZXItcHJvbW90aW9ucyBzZmNjLnNlc3Npb25fYnJpZGdlIHNmY2Muc2hvcHBlci1teWFjY291bnQucGF5bWVudGluc3RydW1lbnRzLnJ3IHNmY2Muc2hvcHBlci1teWFjY291bnQucHJvZHVjdGxpc3RzIHNmY2Muc2hvcHBlci1jYXRlZ29yaWVzIHNmY2Muc2hvcHBlci1teWFjY291bnQgc2ZjYy5zaG9wcGVyLW15YWNjb3VudC5hZGRyZXNzZXMgc2ZjYy5zaG9wcGVyLXByb2R1Y3RzIHNmY2Muc2hvcHBlci1teWFjY291bnQucncgc2ZjYy5zaG9wcGVyLWNvbnRleHQucncgc2ZjYy5zaG9wcGVyLWJhc2tldHMtb3JkZXJzIHNmY2Muc2hvcHBlci1jdXN0b21lcnMucmVnaXN0ZXIgc2ZjYy5zaG9wcGVyLW15YWNjb3VudC5hZGRyZXNzZXMucncgc2ZjYy5zaG9wcGVyLW15YWNjb3VudC5wcm9kdWN0bGlzdHMucncgc2ZjYy5zaG9wcGVyLWJhc2tldHMtb3JkZXJzLnJ3IHNmY2Muc2hvcHBlci1naWZ0LWNlcnRpZmljYXRlcyBzZmNjLnNob3BwZXItcHJvZHVjdC1zZWFyY2ggc2ZjYy5zaG9wcGVyLXNlbyIsInN1YiI6ImNjLXNsYXM6OmJicGNfcHJkOjpzY2lkOjJiNTYzYTRlLWI1MTAtNDE0My05N2UxLWY0NTQxNTk2M2UyMTo6dXNpZDo2MjIxM2NkOS1mOTE3LTQ1ZjYtODhkNS1hZmNkNjJiMzUwZTEiLCJjdHgiOiJzbGFzIiwiaXNzIjoic2xhcy9wcm9kL2JicGNfcHJkIiwiaXN0IjoxLCJkbnQiOiIwIiwiYXVkIjoiY29tbWVyY2VjbG91ZC9wcm9kL2JicGNfcHJkIiwibmJmIjoxNzE1ODg4NDYwLCJzdHkiOiJVc2VyIiwiaXNiIjoidWlkbzpzbGFzOjp1cG46R3Vlc3Q6OnVpZG46R3Vlc3QgVXNlcjo6Z2NpZDphYmxIZ1drcmxIeGJJUnhISVZsV1lZbHV3MDo6Y2hpZDogIiwiZXhwIjoxNzE1ODkwMjkwLCJpYXQiOjE3MTU4ODg0OTAsImp0aSI6IkMyQzE3MjI2Mjc5NzgwLTY1MzY3MTExMTY4MTU5MTk1OTk2NTMxNyJ9.CwaqSbSgAs1TcJeCckQ-XiV3FxadyPFpX7xudNoaqmXAymBXaYUY65vstTdvV2M01AxmrxiAiVp5I1yGbll86w",
  "id_token": "",
  "refresh_token": "KJhLp00yFQw8CgYXrke-5wVOKUOs-2EHS3FUEnstGwU",
  "expires_in": 1800,
  "refresh_token_expires_in": 2592000,
  "token_type": "BEARER",
  "usid": "62213cd9-f917-45f6-88d5-afcd62b350e1",
  "customer_id": "ablHgWkrlHxbIRxHIVlWYYluw0",
  "enc_user_id": "adb831a7fdd83dd1e2a309ce7591dff8",
  "idp_access_token": null,
  "idp_refresh_token": ""
}
```

We’ve got our access token, along with the information that it lasts 1800 seconds (30 minutes), long enough to scrape the whole website.
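The access_token above is a JWT (three base64url-encoded parts separated by dots), so its claims, such as the `exp` and `iat` timestamps, can also be read from the token itself. A minimal sketch that decodes the payload without verifying the signature, which is fine for inspecting a token but not for trusting it; `jwt_claims` is a hypothetical helper name.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode the payload (middle part) of a JWT without verifying its signature."""
    payload = token.split(".")[1]
    # base64url data in JWTs is unpadded: restore the padding before decoding
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))
```

For example, `claims = jwt_claims(token)` followed by `claims["exp"] - claims["iat"]` gives the token lifetime in seconds, which can be compared with the expires_in field.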

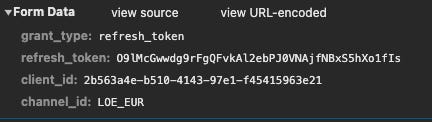

Parsing the response and using the token

The hardest part of this project is done: now we need to parse the JSON response and place the token in the headers for the final API call.

```python
import json

from scrapy import Request

def read_token(self, response):
    json_data = json.loads(response.text)
    print(json_data)
    token = json_data['access_token']
    # Headers copied from the browser's request, with the fresh Bearer token
    self.API_HEADERS = {
        "accept": "*/*",
        "accept-language": "en-US,en;q=0.5",
        "authorization": "Bearer " + token,
        "correlation-id": "6fc9aa0c-535a-44c7-80bc-61db6844dd1b",
        "priority": "u=1, i",
        "sec-ch-ua": "\"Chromium\";v=\"124\", \"Brave\";v=\"124\", \"Not-A.Brand\";v=\"99\"",
        "sec-ch-ua-mobile": "?0",
        "sec-ch-ua-platform": "\"macOS\"",
        "sec-fetch-dest": "empty",
        "sec-fetch-mode": "cors",
        "sec-fetch-site": "same-origin",
        "sec-gpc": "1",
    }
    site_id = response.meta.get('site_id')
    currency = response.meta.get('currency')
    country2 = response.meta.get('country2')
    locale = response.meta.get('locale')
    print(country2)
    categories = ['women', 'men', 'w_paulas', 'm_paulas', 'gifts']
    for cat in categories:
        # offset is 0-based: start at 0 so the first 32 products are not skipped
        url = ('https://www.loewe.com/mobify/proxy/api/search/shopper-search/v1/organizations/f_ecom_bbpc_prd/product-search'
               '?siteId=' + site_id + '&refine=cgid%3D' + cat + '&currency=' + currency
               + '&locale=' + locale + '&offset=0&limit=32&c_isSaUserType=false&c_countryCode=' + country2.strip())
        yield Request(url, callback=self.parse_category, headers=self.API_HEADERS, meta=response.meta)
```

After reading the JSON, we set an instance attribute called API_HEADERS that contains the Bearer token, and we use it as headers in every API call we make to read prices.
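Storing the headers on `self` works well when a single token is in use. If the spider handles several locations and the tokens turn out to be tied to a channel (not verified here), each `read_token` call would overwrite the headers the other locations rely on. One alternative, sketched below with the hypothetical helper `build_api_headers` and a trimmed copy of the headers above, is to build a fresh dictionary per token and carry it in `meta`.

```python
BASE_API_HEADERS = {
    "accept": "*/*",
    "accept-language": "en-US,en;q=0.5",
    "sec-fetch-dest": "empty",
    "sec-fetch-mode": "cors",
    "sec-fetch-site": "same-origin",
}


def build_api_headers(token_json: dict, base: dict = BASE_API_HEADERS) -> dict:
    """Return a new headers dict with the Bearer token, leaving `base` untouched."""
    headers = dict(base)
    headers["authorization"] = "Bearer " + token_json["access_token"]
    return headers


# In read_token (sketch):
#     headers = build_api_headers(json.loads(response.text))
#     meta = {**response.meta, "api_headers": headers}
#     yield Request(url, callback=self.parse_category, headers=headers, meta=meta)
# and parse_category would reuse response.meta["api_headers"] for the next page.
```

Since each request carries its own headers, pagination for one country can never pick up another country’s token.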

We’re also using the parameters we passed at the beginning of the scraper to complete the endpoint URL.

After some trial and error, I managed to reduce the URL parameters to the minimum needed and to discover the macro product categories available on the website, so that we can iterate over them.
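Building the query string by concatenation works, but `urllib.parse.urlencode` takes care of encoding values like `cgid=women` (the `%3D` in the URLs above). A minimal sketch, assuming the reduced parameter set used in `read_token`; `search_url` is a hypothetical helper, and the offset defaults to 0, the first page.

```python
from urllib.parse import urlencode

SEARCH_ENDPOINT = ("https://www.loewe.com/mobify/proxy/api/search/shopper-search/v1/"
                   "organizations/f_ecom_bbpc_prd/product-search")


def search_url(site_id: str, category: str, currency: str, locale: str,
               country: str, offset: int = 0, limit: int = 32) -> str:
    params = [
        ("siteId", site_id),
        ("refine", f"cgid={category}"),  # encoded as refine=cgid%3D<category>
        ("currency", currency),
        ("locale", locale),
        ("offset", offset),
        ("limit", limit),
        ("c_isSaUserType", "false"),
        ("c_countryCode", country.strip()),
    ]
    return SEARCH_ENDPOINT + "?" + urlencode(params)
```

A list of tuples is used instead of a dict so that repeated parameters, such as several refine filters, could be added if needed.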

Reading the items

The remaining part of the scraper is a piece of cake: we just need to parse the JSON response of the API to collect the items and, if needed, move to the next page when there could be more products to show.

```python
import csv
import json
from datetime import datetime

from scrapy import Request

def parse_category(self, response):
    country = response.meta.get('country')
    json_data = json.loads(response.text)
    hits = json_data.get('hits', [])  # tolerate a response without a 'hits' key
    for hit in hits:
        try:
            product_code = hit['productId']
        except (KeyError, TypeError):
            product_code = self.DEFAULT_VALUE  # if the JSON field does not exist, write the default value
        try:
            fullprice = hit['c_gtm_data']['price']
        except (KeyError, TypeError):
            fullprice = 0
        try:
            price = hit['c_gtm_data']['price']
        except (KeyError, TypeError):
            price = 0
        # .....
        website = 'LOEWE'
        data = datetime.now().strftime("%Y%m%d")
        # The with block closes the file on exit, so no explicit close() is needed
        with open("final_output.txt", "a", newline="") as file:
            csv_file = csv.writer(file, delimiter="|")
            csv_file.writerow([product_code, gender, fullprice, price, currency, country, product_url,
                               brand, website, data, category1_code, category2_code, category3_code,
                               imageurl, title])
    # A full page (32 hits) means there may be more products: request the next offset
    if len(hits) == 32:
        new_offset = 32 + json_data['offset']
        new_url = (response.url.split('&offset=')[0] + '&offset=' + str(new_offset)
                   + '&limit=' + response.url.split('&limit=')[1])
        yield Request(new_url, callback=self.parse_category, headers=self.API_HEADERS, meta=response.meta)
```

The API returns at most 32 items per call, as set by the limit parameter. So, if we get exactly 32 items, there could be more on the next page, and we increase the offset by 32.
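Rebuilding the next-page URL with string splits depends on offset and limit appearing in a fixed order in the query. A sketch of an order-independent alternative with `urllib.parse`, which keeps repeated parameters such as refine intact; `next_page_url` is a hypothetical helper that returns None when the last page was not full.

```python
from __future__ import annotations

from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def next_page_url(url: str, hits_returned: int) -> str | None:
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    query = dict(params)  # only used to read offset and limit
    limit = int(query.get("limit", 32))
    if hits_returned < limit:
        return None  # a short page means there is nothing more to fetch
    offset = int(query.get("offset", 0)) + limit
    params = [(k, str(offset) if k == "offset" else v) for k, v in params]
    if "offset" not in query:
        params.append(("offset", str(offset)))
    return urlunsplit(parts._replace(query=urlencode(params)))
```

In `parse_category`, the pagination block would become a call to `next_page_url(response.url, len(hits))`, yielding a new Request only when the result is not None.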

That’s it! I hope this article will be helpful in your future projects. You can find the full scraper code in the GitHub repository available to paying readers, in the folder named 51.BEARER.