Bypassing Kasada for web scraping 2024 edition

Excerpt

Another article with tools and techniques to bypass an anti-bot: how can we bypass Kasada with undetected-chromedriver, Playwright, or commercial tools?

Let’s continue 2024 with another article where we bypass an anti-bot solution to get some public data, like prices from e-commerce websites.

After writing two posts about Cloudflare (here’s part one and at this link you can find part two), today it’s Kasada’s turn. Kasada is a niche anti-bot solution with a different approach to bot detection. It’s used by large platforms like Twitch and e-commerce websites like Canada Goose, which will be our testing target for this article.

How does Kasada work?

Kasada works in a peculiar way compared to other solutions. Think of it as a sort of firewall: instead of looking at the connection port, it collects hundreds of data points from our browser, applies some AI, and then decides whether the request may complete. Kasada’s core business is fraud prevention, so I assume that more checks run at login or purchase time, but that is unexplored territory for me, so let’s stick to what we can see when browsing public data.

When we send the first request to a website protected by Kasada, we automatically get a 429 error: it’s a challenge triggered by the anti-bot, which passes our parameters to the Kasada system.

If the data passed by this request to the Kasada backend is coherent with a plausible fingerprint generated by a human browsing, your request gets redirected to the requested URL, otherwise, you’ll see only a white page.

This cuts out all the scrapers without a JavaScript rendering engine, like Scrapy, since they cannot solve the challenge and get blocked by default.
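To make the flow above concrete, here is a minimal sketch of how a scraper could label the first response it receives. The function name and the three labels are made up for illustration; the only facts taken from the behaviour described above are the 429 status for the challenge and the blank page for a failed check. Treating an empty body as the "white page" is an assumption, and other sites may behave differently.

```python
def classify_first_response(status_code: int, body: str) -> str:
    """Label a response using only the two signals described in this article."""
    if status_code == 429:
        # The challenge: a JavaScript-capable client is expected to solve it.
        return "challenge"
    if not body.strip():
        # Assumption: the "white page" shows up as an empty body.
        return "blank"
    return "content"


# A plain HTTP client such as Scrapy would stop at the first label.
print(classify_first_response(429, "<html><script></script></html>"))
```

A check like this is only useful for logging: it tells you that a request was challenged, not how to pass the challenge.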

Given this, I approached scraping the canadagoose.com e-commerce website with three tools, starting with undetected-chromedriver.

All three solutions provided in this article can be found in the private repository on GitHub, available for paying users. If you’re one of them but don’t have access, please write me at pier@thewebscraping.club with your GitHub username since I need to add you manually.

First solution: undetected-chromedriver

Undetected-chromedriver is a Selenium Chromedriver patch that should not trigger anti-bot services.

If you’re not familiar with Selenium and webdrivers, let’s take a step back.

Selenium is a browser automation tool used for testing web apps: it provides a framework for interacting with web pages. It allows you to write code that can perform actions like clicking buttons, entering text, and extracting data from web pages. For this reason, it’s also well known in the web scraping industry. To communicate with the browser it needs the so-called webdrivers, which are the links between the Selenium commands and the browsers. Every browser has its own webdriver: ChromeDriver for Google Chrome, GeckoDriver for Mozilla Firefox, and EdgeDriver for Microsoft Edge.

So undetected-chromedriver is a version of the standard chromedriver, more focused on the features and settings needed for web scraping.

The scraper should work as follows: load the home page, get the list of all the product categories, and iterate through it. For every product category, the scraper should scroll down to the bottom to load every product, since the page works with infinite scroll and loads more items as you scroll down if they are available. After all the items for a single category are listed on the page, then we can scrape information out from them.

Load the home page

import csv
import os
import time

import undetected_chromedriver as uc
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys

options = webdriver.ChromeOptions()
# Keep the browser visible: headless sessions get detected and banned.
options.headless = False
# Not needed with undetected-chromedriver, which disables this feature by default.
#options.add_argument('--disable-blink-features=AutomationControlled')
driver = uc.Chrome(options=options)
driver.get("https://www.canadagoose.com/us/en/home-page")
driver.set_window_size(1280, 1024)
# Wait for the page to finish loading.
time.sleep(20)

After the usual imports, we create the list of options we need to pass to the undetected-chromedriver.

First of all, we need to set the headless attribute to False: even with JavaScript enabled, the anti-bot still detects a headless browser and bans us.

The other option I usually add is '--disable-blink-features=AutomationControlled', which turns off the Blink feature that flags the browser as controlled by an automation tool (it is what sets navigator.webdriver to true). To keep the session as close as possible to a human-controlled one, I always disable it. With undetected-chromedriver, this is not needed since it’s disabled by default.

Closing the popup and selecting the categories

# Accept the cookie banner shown on the first visit.
driver.find_element(By.XPATH,'//button[@id="onetrust-accept-btn-handler"]').click()
time.sleep(20)
# Collect the URL of every second-level product category.
categories=driver.find_elements(By.XPATH,'//li[contains(@class, "secondlevelcat")]/a')
cat_list=[]
for cat_url in categories:
    print(cat_url.get_attribute("href"))
    cat_list.append(cat_url.get_attribute("href"))

The first thing I do after loading the homepage is to close the popup that opens when the homepage is loaded for the first time.

Then, I’m creating a list of all the URLs of the product categories, so I can use it as input for the second part of the scraper.
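Navigation menus often repeat the same link, and Selenium’s get_attribute returns None when an attribute is missing, so it can be worth cleaning the list before looping over it. A minimal sketch, assuming duplicates or empty values may appear (I have not verified that they do on this site); the helper name unique_urls is made up for this example.

```python
def unique_urls(hrefs):
    """Return the non-empty hrefs in first-seen order, without duplicates."""
    seen = set()
    result = []
    for href in hrefs:
        if not href or href in seen:
            continue
        seen.add(href)
        result.append(href)
    return result


# Hypothetical usage with the elements selected above:
# cat_list = unique_urls(a.get_attribute("href") for a in categories)
```

Keeping the first-seen order means the categories are still visited in the order they appear in the menu.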

Scrolling down the categories and parsing the HTML

for cat in cat_list:
    driver.get(cat)
    # Press Page Down 100 times to trigger the infinite scroll.
    i=0
    while i < 100:
        driver.find_elements(By.TAG_NAME,'body')[0].send_keys(Keys.PAGE_DOWN)
        i=i+1
        time.sleep(0.5)
    time.sleep(5)
    products=driver.find_elements(By.XPATH,'//div[contains(@class, "product h-100")]')
    for product in products:
        product_code = product.get_attribute("data-pid")
        # Relative XPath (".//") so that each product returns its own price
        # instead of the first price found on the page.
        product_price= product.find_element(By.XPATH,'.//span[@class="actual-price"]/span').get_attribute("content")
        product_name= product.find_element(By.XPATH,'.//a').get_attribute("title")
        image= product.find_element(By.XPATH,'.//img').get_attribute("src")
        itemurl= product.find_element(By.XPATH,'.//a').get_attribute("href")
        # The selected country is a page-level element, hence the driver lookup.
        country= driver.find_element(By.XPATH,'//span[contains(@class, "selected_country")]').text.strip()
        currency = product.find_element(By.XPATH,'.//span[@itemprop="priceCurrency"]').get_attribute("content")
        # The with block closes the file, so no explicit close() is needed.
        with open("file_output.txt", "a", newline="") as file:
            csv_file = csv.writer(file, delimiter="|")
            csv_file.writerow([product_code,product_price,product_name,itemurl,image, country, currency])

Now we’re reading one URL at a time from the list created in the previous step.

To scroll down to the end of the product list, I’ve used the trick of simulating the Page Down key 100 times. It could be done more elegantly and dynamically, but I could not find a suitable element that tells the program when to stop scrolling, so I’ve used a fixed number of repetitions based on the size of the pages.
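One possible alternative to a fixed count is to stop when the number of products on the page no longer grows. The sketch below is not part of the original scraper: the function name, the patience parameter, and the default values are assumptions, and it takes two callables so that it does not depend on Selenium directly.

```python
import time


def scroll_until_stable(count_items, scroll_once, max_rounds=100, patience=3, pause=0.5):
    """Scroll until the item count stops growing or max_rounds is reached.

    count_items: callable returning how many products are currently on the page.
    scroll_once: callable performing a single scroll step.
    patience: consecutive rounds without new items that end the loop.
    Returns the last item count seen.
    """
    last_count = count_items()
    stale_rounds = 0
    for _ in range(max_rounds):
        scroll_once()
        time.sleep(pause)
        current = count_items()
        if current > last_count:
            last_count = current
            stale_rounds = 0
        else:
            stale_rounds += 1
            if stale_rounds >= patience:
                break
    return last_count


# Hypothetical wiring with the Selenium objects used in this article:
# body = driver.find_element(By.TAG_NAME, "body")
# scroll_until_stable(
#     count_items=lambda: len(driver.find_elements(
#         By.XPATH, '//div[contains(@class, "product h-100")]')),
#     scroll_once=lambda: body.send_keys(Keys.PAGE_DOWN),
# )
```

The max_rounds cap keeps the original upper bound of 100 key presses, so a page that never stops loading cannot block the scraper forever. A patience value that is too low may stop early on a slow connection.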

The rest of the scraper is plain vanilla HTML parsing with undetected-chromedriver and output to CSV file.
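The output step can also be written so that the file is opened once per batch of rows instead of once per product. A minimal sketch, assuming the rows have already been collected in a list; the function name and the header row are additions for illustration, while the column order and the pipe delimiter follow the scraper above.

```python
import csv

# Column names mirror the variables written by the scraper.
FIELDS = ["product_code", "product_price", "product_name",
          "itemurl", "image", "country", "currency"]


def write_products(path, rows):
    """Write a header and all rows to a pipe-delimited file, opened once."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle, delimiter="|")
        writer.writerow(FIELDS)
        writer.writerows(rows)
    return len(rows)
```

Note that this version overwrites the file on each call, unlike the append mode used in the scraper, so it suits a run that collects everything before writing.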

Does undetected-chromedriver bypass Kasada?

Well, yes and no. If you run this script from your personal device, it should work, without the need for any proxy.

But if you run it from a data center, it stops working, even if you add a residential proxy. Kasada probably reads some browser attributes that describe the underlying machine and that this version of undetected-chromedriver does not mask, so it recognizes that the scraper runs in a data center instead of on a personal device.

On top of that, undetected-chromedriver does not support proxies that require authentication, and this is a big problem: you need a provider that offers access without authentication from a whitelisted IP (as Smartproxy does, for example), but in many cases you don’t know your IP before starting the scraping operations.
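For the IP-whitelisted case, the proxy can be passed to Chrome through its --proxy-server switch, which takes a scheme, host, and port. The helper below is a sketch with a made-up name and a placeholder host; it refuses URLs that carry credentials, to make the limitation described above explicit.

```python
from urllib.parse import urlsplit


def proxy_server_argument(proxy_url: str) -> str:
    """Build a --proxy-server argument for an IP-whitelisted (no-auth) proxy."""
    parts = urlsplit(proxy_url)
    if parts.username or parts.password:
        # Credentials cannot be passed this way, so fail early.
        raise ValueError("credentials in the proxy URL are not supported here")
    if not parts.hostname or not parts.port:
        raise ValueError("expected a URL like http://host:port")
    return f"--proxy-server={parts.scheme}://{parts.hostname}:{parts.port}"


# Hypothetical usage; proxy.example.com is a placeholder, not a real provider:
# options.add_argument(proxy_server_argument("http://proxy.example.com:8000"))
```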

Second solution: Playwright with Brave Browser

This solution is similar to the first one, but we’re changing the tool we’re using: instead of using Selenium with undetected-chromedriver, we’re using Playwright with Brave Browser.

Playwright is another browser automation tool designed for application testing: in fact, its core job is to automate tests on websites and web apps using different browsers and emulating different device setups. You can easily understand why it’s becoming more popular in the web scraping industry, even though it has some limitations, especially in performance and concurrent execution.

Unlike Selenium, it doesn’t need a separate webdriver, since it communicates directly with the browsers. In fact, when you install Playwright, you also install the binaries of the patched browsers it drives: Chromium, Firefox, and WebKit (the engine behind Safari). On top of them, you can use any Chromium-based browser like Brave, which is my first choice since it has some native fingerprint spoofing features.

The spider can be found in our GitHub repository, under folder 38.KASADA, with the name playwright_brave.py.

A look at the code

The logic of the scraper is identical to the previous one; only the tool used to implement it changes.

import time
from random import randrange

def scroll_down(page):
    """Scroll the page down with the mouse wheel a fixed number of times."""
    i=0
    #increase if number of pages per category is increased
    while i < 10:
        page.mouse.wheel(0,20000)
        # Random pause of 3 or 4 seconds between scrolls.
        interval=randrange(3,5)
        time.sleep(interval)
        print(i)
        i=i+1

This, for example, is the function we’ll use to scroll down the pages. Instead of sending the keyboard signal, we’ll use a more realistic mouse wheel scrolling, again for a fixed number of times.

CHROMIUM_ARGS= [
    '--no-sandbox',
    '--disable-setuid-sandbox',
    '--no-first-run',
    '--disable-blink-features=AutomationControlled'
]
# p, retry, proxy and cat are not defined in this excerpt;
# they come from the rest of the script.
browser = p.chromium.launch_persistent_context(
    user_data_dir='./userdata'+str(retry)+'/',
    channel='chrome',
    executable_path='/usr/bin/brave-browser',
    headless=False,
    slow_mo=200,
    args=CHROMIUM_ARGS,
    ignore_default_args=["--enable-automation"],
    proxy=proxy
)
all_pages = browser.pages
page = all_pages[0]
page.goto(cat, timeout=0)

We’re launching Brave by specifying its executable path (which changes from OS to OS), and using a persistent context, to save the cookies saying we’ve passed the Kasada challenge.
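The proxy variable passed to launch_persistent_context is not shown in the excerpt. Playwright expects a dictionary with a server key and optional username and password keys, so authenticated proxies are possible here. A minimal sketch with a made-up helper name and placeholder values:

```python
def build_playwright_proxy(server, username=None, password=None):
    """Build the proxy dictionary accepted by Playwright's launch methods."""
    proxy = {"server": server}
    # Credentials are optional: leave them out for IP-whitelisted proxies.
    if username is not None:
        proxy["username"] = username
    if password is not None:
        proxy["password"] = password
    return proxy


# Placeholder host and credentials, to be replaced with your provider's values.
proxy = build_playwright_proxy("http://proxy.example.com:8000", "my-user", "my-password")
```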

Last but not least, something I’ve learned only recently: with browser.pages I get a list of all the pages inside a BrowserContext, like the one we just created. Instead of creating a new page each time, I can always use the first page of the context for browsing the categories.
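Indexing the first page assumes the context already has one open. A slightly more defensive variant, sketched here with a hypothetical helper name, falls back to new_page() when the list is empty:

```python
def first_page(context):
    """Return the first open page of a context, creating one if none exists."""
    pages = context.pages
    return pages[0] if pages else context.new_page()


# Hypothetical usage with the persistent context created above:
# page = first_page(browser)
# page.goto(cat, timeout=0)
```

The helper only relies on the pages property and the new_page() method of the context, so it works with any object exposing those two members.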

Does Playwright with Brave browser bypass Kasada?

Again, yes and no, we’ve got the same results as before. Even by adding residential proxies, the scraper runs locally but not on the server.

I’m afraid we should go for a commercial solution to bypass it.

Final solution: Playwright with Bright Data scraping browser

I’ve tried some other open-source tools to scrape Kasada, like Scrapy Impersonate or hRequests, but they all failed. Even among the commercial solutions, not everything worked.

What surely worked was the Bright Data Scraping Browser: it’s a sort of anti-detect browser, specifically designed for web scraping, which creates plausible fingerprints to use in your scraping projects, solves CAPTCHAs, renders JavaScript, and has many other cool features that make developers’ lives easier.

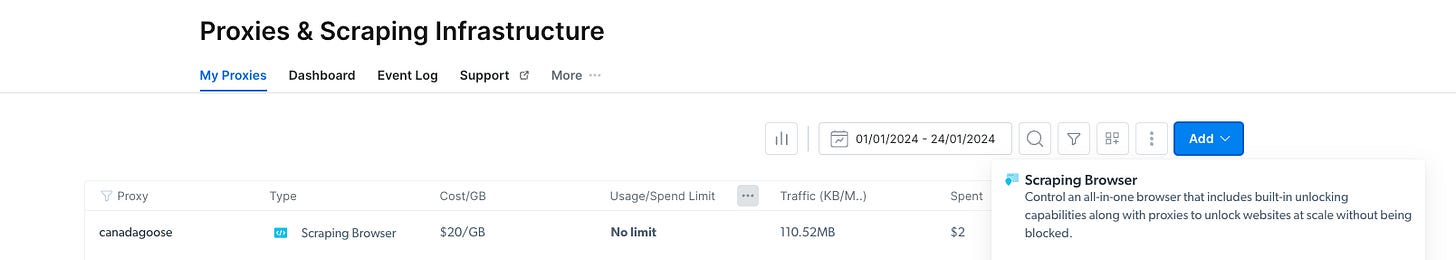

After you log in to your Bright Data account, you can create a new zone by clicking on the add button and selecting Scraping Browser.

Then you’ll receive the credentials for its usage and that’s it. Depending on your plan, it may cost from $20 per GB down to less if you commit to more usage.

Its integration with Playwright (just like Selenium or Puppeteer) is straightforward, and it happens via Chrome DevTools Protocol.

You don’t need to install any additional package in your environment: simply connect the scraper to the WebSocket endpoint, as we did in the file playwright_with_bd.py, and you’re ready to go.
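As a rough idea of what such a connection can look like, here is a minimal sketch that uses Playwright’s connect_over_cdp. It is not the content of playwright_with_bd.py: the helper names are made up, and the host, username, and password are placeholders to be replaced with the values shown in your Bright Data zone.

```python
from urllib.parse import quote


def build_ws_endpoint(username: str, password: str, host: str) -> str:
    """Compose a wss:// URL with URL-encoded credentials."""
    return f"wss://{quote(username, safe='')}:{quote(password, safe='')}@{host}"


def fetch_title(endpoint: str, url: str) -> str:
    """Open url in the remote browser over CDP and return the page title."""
    # Imported here so the helper above can be used without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.connect_over_cdp(endpoint)
        try:
            page = browser.new_page()
            # Remote browsers can be slow to start, hence the generous timeout.
            page.goto(url, timeout=120_000)
            return page.title()
        finally:
            browser.close()


# Placeholder values; the real ones come with the zone credentials.
# endpoint = build_ws_endpoint("my-user", "my-password", "HOST:PORT")
# print(fetch_title(endpoint, "https://www.canadagoose.com/us/en/home-page"))
```

Encoding the credentials avoids broken URLs when the password contains characters such as @ or :.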

If you’re aware of any other solution, especially OSS tools, please write to me at pier@thewebscraping.club and I’ll be happy to test it and share it with others.